
Tips for enhancing results in DALL-E 2

Expect randomness: the same prompt will produce better and worse results across multiple attempts.

Picture the type of result you want before creating the prompt. This encourages a more specific prompt.

Prompt as if you are captioning an existing image in a newspaper. Because DALL-E 2 was trained on image-caption pairs, reading stock photograph descriptions is a good way to get a feel for wording and style. Present tense seems to work best.

Multiple clauses can be used to specify additional requirements:

Medium

A ballpoint pen lying on a desk, stock photograph

Portrait of a king wearing a golden crown, head and shoulders, renaissance painting

Source

An astronaut spacesuit in a museum, tourist's photograph

Photograph of a family listening to the record player, wide shot, life magazine 1970

Lighting

A whole green cucumber and slices, studio lighting

A croquet game on a green lawn, warm outdoor photograph, calm

Camera attributes

Closeup of a wooden lattice, garden visible in background, shallow depth of field

A man bowling at a ten pin bowling alley, action shot
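The clause pattern above can be sketched as a small helper that joins a subject with optional clauses into one comma-separated caption. This is only an illustration: the function name `build_prompt`, its keyword names, and the clause order (camera, lighting, medium, source) are assumptions made for this sketch, not anything DALL-E 2 requires.

```python
def build_prompt(subject, *, camera="", lighting="", medium="", source=""):
    """Join a subject with optional clauses into one comma-separated caption.

    The clause order used here is an assumption for illustration only.
    """
    clauses = [subject, camera, lighting, medium, source]
    # Drop empty clauses so the caption has no stray commas.
    return ", ".join(part.strip() for part in clauses if part and part.strip())


print(build_prompt("A whole green cucumber and slices", lighting="studio lighting"))
print(build_prompt(
    "An astronaut spacesuit in a museum",
    source="tourist's photograph",
))
```

Keeping each requirement in its own clause makes it easy to change one (say, the lighting) between attempts while leaving the rest of the caption fixed.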

Specify camera zoom and angle, as DALL-E 2 often defaults to closeups:

Wide shot of a restaurant, diners and wait staff visible

Full shot of a man in fireman's uniform and hat, studio lighting, stock photograph

Chicken schnitzel closeup

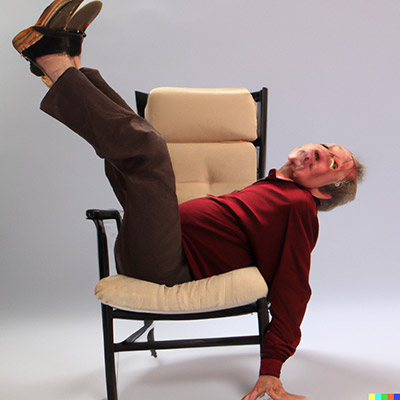

Adjectives can be very effective but are not consistently applied to the correct noun, so keep trying!

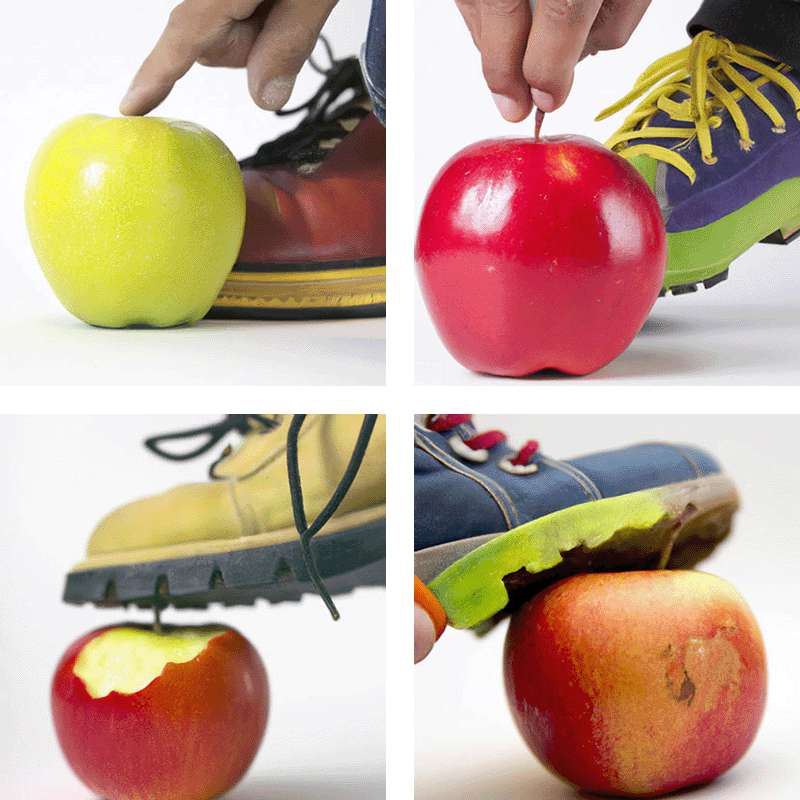

Duplication has been reported to be effective at focusing on a particular description and improving its quality, e.g.:

A smiling girl is tickled, laughing, bright lighting, happy
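One way to experiment with duplication is to append several related descriptors to a base prompt. The sketch below reads "duplication" as restating the same idea in related words, which is how the example above appears to work; that reading, the helper name `reinforce`, and the choice to skip verbatim repeats are all assumptions made for illustration.

```python
def reinforce(prompt, descriptors):
    """Append reinforcing descriptors to a prompt as extra clauses.

    Descriptors already present as a clause (case-insensitive) are skipped.
    """
    existing = {clause.strip().lower() for clause in prompt.split(",")}
    extra = []
    for descriptor in descriptors:
        key = descriptor.strip().lower()
        if key and key not in existing:
            extra.append(descriptor.strip())
            existing.add(key)
    return ", ".join([prompt.strip(), *extra])


print(reinforce("A smiling girl is tickled", ["laughing", "bright lighting", "happy"]))
```

Since results vary between attempts, it may help to compare the base prompt and the reinforced prompt over several generations before judging the effect.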
